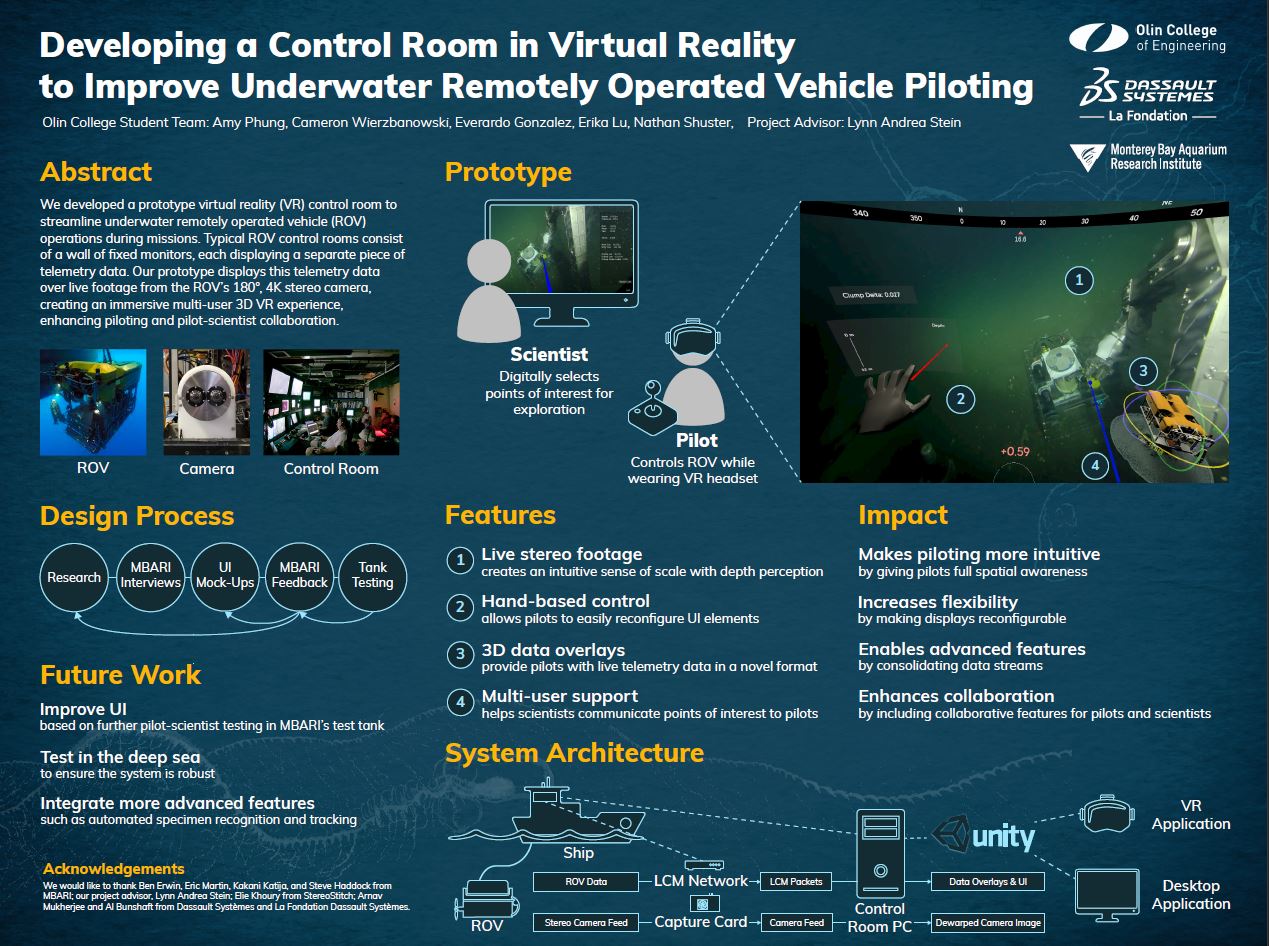

For my senior capstone project at Olin, I led the development of a control room display in virtual reality (VR) to help improve operation of underwater remotely operated vehicles (ROVs). Thanks to generous support from the Dassault Systèmes Foundation, we were able to work on this project in collaboration with the Monterey Bay Aquarium Research Institute (MBARI) over the course of the academic year.


Background & Motivation

Typical ROV control rooms consist of a wall of fixed monitors, each displaying a separate stream of telemetry data.

Seated at the center of the displays is the pilot, who controls the ROV; to their right is the scientist, who identifies targets of scientific interest. Successfully collecting a sample requires seamless communication between the pilot and the scientist, but the existing control room makes this challenging.

One of the scientists we spoke with noted that they have been using laser pointers to point at objects on the screens when communicating with the pilots, and the beams tend to reflect off the TV screens into everyone's eyes. Other pain points of the existing control room are that the TV monitors are bulky and can't easily be reconfigured, and that the room imposes a significant cognitive load, so operating it takes a lot of skill and training.

Our objective was to create a VR control room prototype to explore the potential of VR for alleviating these pain points and improving collaboration between scientists and ROV pilots.

Our Project

We built our app in Unity and used Lightweight Communications and Marshalling (LCM) to read live telemetry data from the ROV. Our project also uses live footage from the newly added 180°, 4K stereo-pair fisheye camera onboard the ROV and computes the reprojections needed to create an immersive, 1:1 scale, 3D view of the ROV's surroundings in VR. Giving ROV pilots a wide field of view, without the distortion that results from projecting raw fisheye footage onto a flat screen, should improve their spatial awareness during operation.

Visual representation of the stereo footage that produces a 3D view in a VR headset
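To make the reprojection step more concrete, here is a minimal sketch of the per-pixel lookup a reprojection shader performs: for each view direction in the headset, find where that direction lands in the fisheye image. It is written in Python rather than as the project's actual Unity shader, and it rests on assumptions the post does not state: an equidistant lens model (image radius grows linearly with the angle from the optical axis), the optical axis along +z, the image circle inscribed in each eye's frame, and a side-by-side stereo frame with the left eye in the left half. The function names are hypothetical.

```python
import math
from typing import Optional, Tuple


def direction_to_fisheye_uv(
    x: float, y: float, z: float, fov_deg: float = 180.0
) -> Optional[Tuple[float, float]]:
    """Map a 3D view direction to normalized (u, v) in one fisheye image.

    Assumed conventions (not from the original project): optical axis is +z,
    +x points to image right, +y to image up (v grows upward, as in Unity UVs),
    and the lens follows the equidistant model r = f * theta.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    # Angle between the view direction and the optical axis (clamped for acos).
    theta = math.acos(max(-1.0, min(1.0, z / norm)))
    half_fov = math.radians(fov_deg) / 2.0
    if theta > half_fov:
        return None  # direction lies outside the lens's field of view
    r = theta / half_fov  # equidistant: normalized radius is linear in angle
    phi = math.atan2(y, x)  # azimuth around the optical axis
    u = 0.5 + 0.5 * r * math.cos(phi)
    v = 0.5 + 0.5 * r * math.sin(phi)
    return (u, v)


def stereo_uv(
    x: float, y: float, z: float, eye: str, fov_deg: float = 180.0
) -> Optional[Tuple[float, float]]:
    """Same lookup, but in a side-by-side stereo frame (left eye in left half)."""
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    uv = direction_to_fisheye_uv(x, y, z, fov_deg)
    if uv is None:
        return None
    u, v = uv
    offset = 0.0 if eye == "left" else 0.5
    # Squeeze the per-eye u into its half of the combined frame.
    return (offset + 0.5 * u, v)
```

For example, looking straight ahead, `stereo_uv(0, 0, 1, "right")` samples the center of the right half of the frame, and a direction directly behind the camera returns `None`. Rendering each eye's view through its own camera's lookup is what lets the headset present the two lenses' parallax as depth; a real camera would also need calibrated intrinsics rather than the idealized model assumed here.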

To begin bringing the functionality of the existing control room into VR, our app integrates the real-time telemetry data into the 3D environment and adds multi-user support so that pilots and scientists can collaborate in VR.

Desktop app allows seamless communication while pilot is wearing VR headset

Our app also includes hand- and gaze-based controls that let pilots rearrange the displays, something that isn't possible with fixed monitors.

Our VR app allows pilots to quickly rearrange displays with their hands

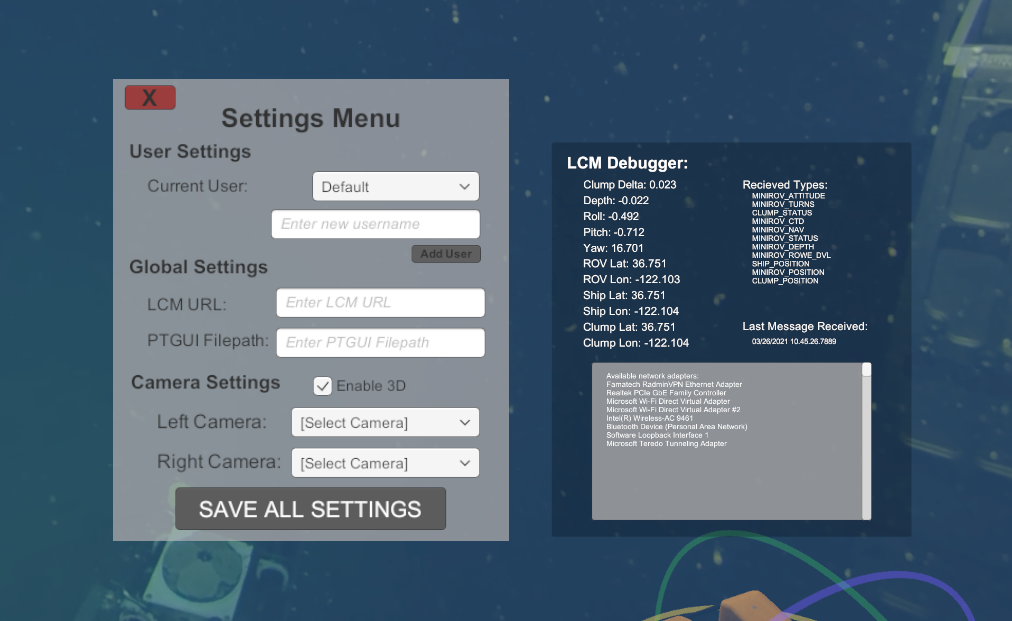

Since pilots have individual preferences for how these displays are arranged, we included an option to save their display preferences, allowing for seamless transitions between pilots.
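The post doesn't describe how these preferences are stored, so the following is only one plausible sketch: each pilot's layout is a list of panel poses (position, rotation, scale), saved to a JSON file keyed by pilot name and restored when that pilot puts on the headset. It is written in Python for illustration; `PanelPose`, `save_layout`, `load_layout`, and the file layout are all hypothetical, not the project's Unity code.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Dict, List


@dataclass
class PanelPose:
    """Hypothetical record of where one telemetry display sits in the VR scene."""
    name: str              # panel identifier, e.g. "depth" (illustrative)
    position: List[float]  # [x, y, z] in meters, relative to the pilot's seat
    rotation: List[float]  # orientation quaternion [x, y, z, w]
    scale: float = 1.0


def save_layout(path: Path, pilot: str, panels: List[PanelPose]) -> None:
    """Store one pilot's layout without overwriting other pilots' layouts."""
    data: Dict[str, list] = {}
    if path.exists():
        data = json.loads(path.read_text())
    data[pilot] = [asdict(p) for p in panels]
    path.write_text(json.dumps(data, indent=2))


def load_layout(path: Path, pilot: str) -> List[PanelPose]:
    """Return the pilot's saved layout, or an empty list if none exists yet."""
    if not path.exists():
        return []
    data = json.loads(path.read_text())
    return [PanelPose(**p) for p in data.get(pilot, [])]
```

Keying layouts by pilot in a single file keeps a shift change down to one lookup: loading the incoming pilot's layout replaces the displays without touching anyone else's saved arrangement.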

Pop-up settings menu and LCM data debugger

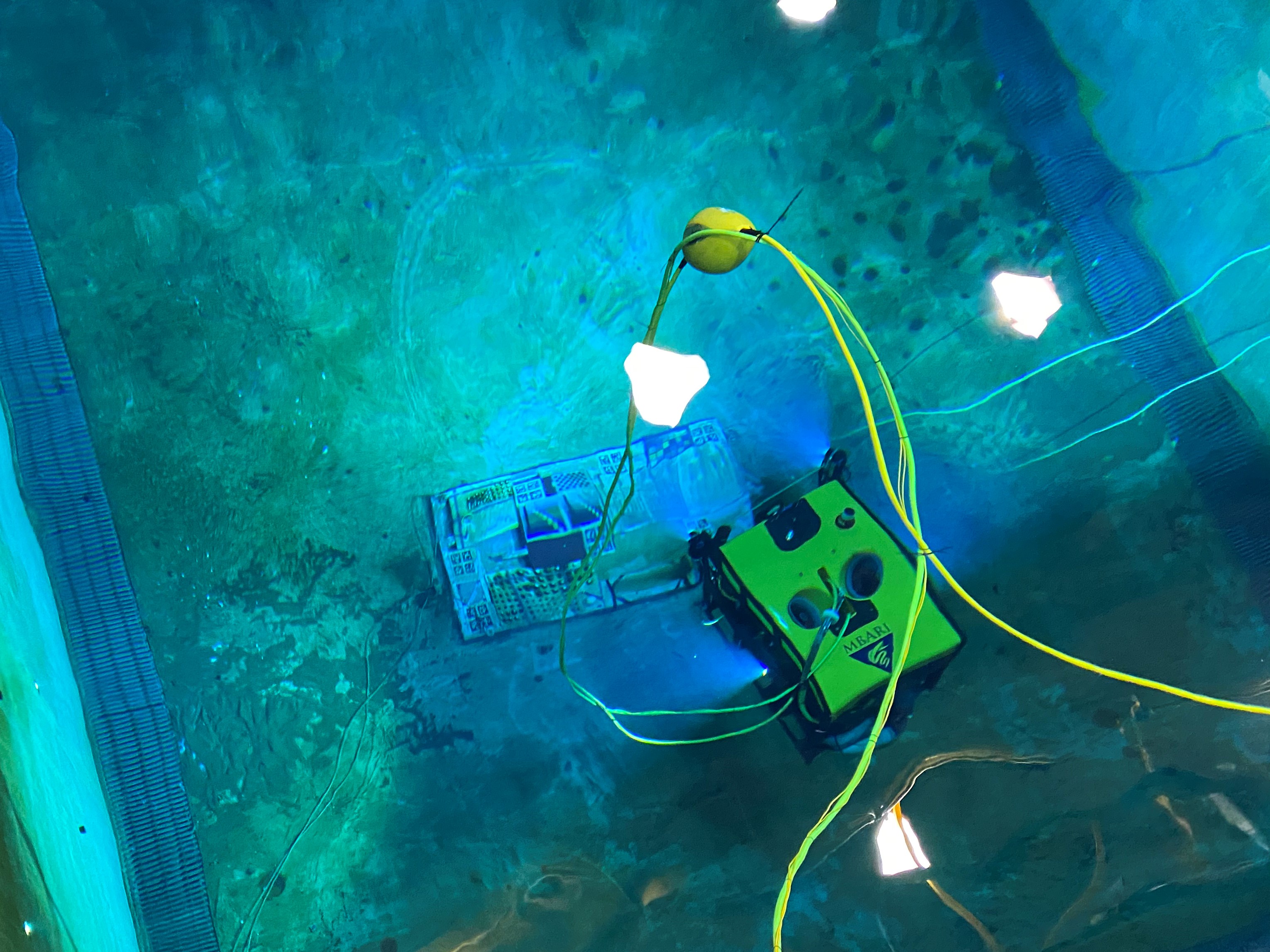

We demonstrated our application during the operation of MBARI's MiniROV in a 1-million-liter saltwater test tank facility, and several ROV pilots tried out our app. The response was fantastic: most of the pilots we spoke to said they were extremely excited to test it at sea this coming summer!

My primary technical contributions to this project were the stereo reprojection shader, the stereo-camera-to-Unity pipeline, the hand-, controller-, and foot/gaze-based controls, and the LCM debugging window.

Next Steps

Although our part of the work has concluded, the project will continue to live on: MBARI plans to take our app to sea for further testing during the summer, and a research group at UC Santa Cruz is looking to run in-depth user studies with our app to quantify whether it actually reduces task completion time. Other avenues for improvement include integrating advanced features such as MBARI's ongoing work on machine-learning-based organism tracking, and continuing to make general UI revisions. There's also discussion at MBARI about potentially using the results of this testing to design a VR-centered control room for their new ship, which will eventually replace the one that currently supports their ROV Doc Ricketts.